Designing Trust in the Age of Algorithms

Paper Reference: Trending Research Topics in 2025 – Academia Scholars

1. The Algorithmic Mirror

Imagine a world where your medical diagnosis, loan approval, or legal sentence is determined by an algorithm you cannot see, understand, or question. As artificial intelligence becomes embedded in critical systems from healthcare to criminal justice, the opacity of “black box” models raises urgent ethical questions. The 2025 research frontier asks: what if we could make these systems not only smarter, but also transparent, accountable, and fair?

This isn’t just a technical challenge; it’s a moral imperative. The future of AI depends not only on performance but also on its ability to explain and justify its decisions and to respect human dignity.

2. The Bigger Picture: Societal and Technological Implications

- Bias and Accountability: AI systems trained on historical data often replicate and amplify societal biases. Without transparency, these biases remain hidden and uncorrected.

- Trust and Adoption: Public trust in AI hinges on its explainability. Users who can’t understand a decision are far less likely to accept it.

- Governance and Regulation: Governments and institutions are calling for frameworks that ensure fairness, accountability, and transparency (FAT) in machine learning.

This research field is not just about improving algorithms; it’s about designing systems that honour human rights and democratic values.

3. Enter the Researchers: Architects of Ethical Intelligence

Interdisciplinary teams in 2025 are merging computer science, philosophy, law, and social science to build ethical AI systems. Their goals include:

- Creating interpretable models that reveal how decisions are made

- Embedding ethical reasoning into algorithmic design

- Developing tools for auditing, visualising, and contesting AI outputs

These researchers aren’t just coding; they’re crafting the moral architecture of the digital age.

4. The Investigation: Building Explainable Systems

Key innovations include:

- XAI Techniques: Methods like SHAP, LIME, and counterfactual explanations help users understand model behaviour

- Human-AI Interaction: Designing interfaces that allow users to query and challenge AI decisions

- Ethical Frameworks: Embedding principles like fairness, non-maleficence, and autonomy into system design

The challenge is balancing performance with interpretability, ensuring that models remain powerful without becoming inscrutable.
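To make the idea of a counterfactual explanation concrete, here is a minimal sketch in Python. It assumes a hypothetical linear credit-scoring model; the feature names, weights, and threshold are invented for illustration and do not come from any real system. For a rejected applicant, it answers the question "how much would this one feature need to change for the decision to flip?"

```python
# Hypothetical linear scoring model. All names and numbers are illustrative assumptions.
WEIGHTS = {"income": 0.5, "debt": -0.75, "years_employed": 0.25}
BIAS = -1.0
THRESHOLD = 0.0  # scores at or above this value are approved


def score(applicant):
    """Weighted sum of the applicant's features plus a bias term."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def approve(applicant):
    """Decision rule: approve when the score reaches the threshold."""
    return score(applicant) >= THRESHOLD


def counterfactual(applicant, feature):
    """Change needed in a single feature to bring the score up to the threshold.

    Returns 0.0 if the applicant is already approved, and None if the
    feature has no influence on the score.
    """
    gap = THRESHOLD - score(applicant)
    if gap <= 0:
        return 0.0
    weight = WEIGHTS[feature]
    if weight == 0:
        return None
    return gap / weight


applicant = {"income": 2.0, "debt": 1.0, "years_employed": 1.0}
print(approve(applicant))                   # False
print(counterfactual(applicant, "income"))  # 1.0
```

Here the explanation reads: "the application would have been approved if income had been one unit higher." Real models are rarely linear, so practical counterfactual methods search for such changes rather than solving for them directly, but the question they answer is the same.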

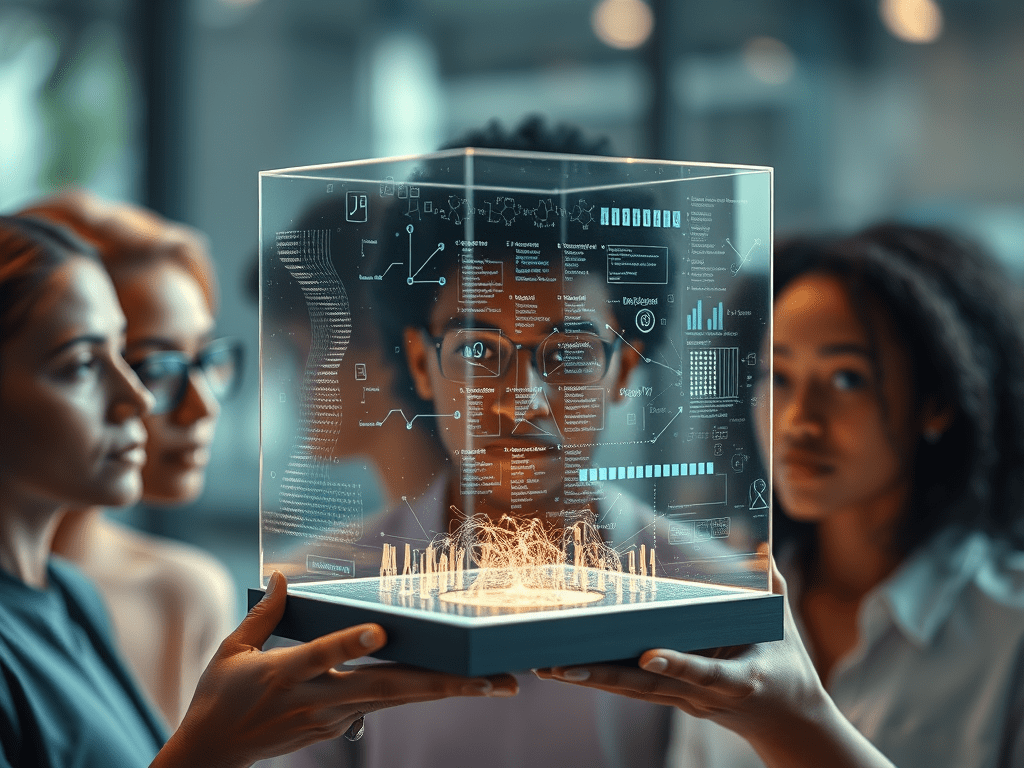

5. The Breakthrough: From Black Box to Glass Box

2025 research has yielded promising results:

- Transparent Diagnostics: Some AI systems in healthcare now offer traceable reasoning for diagnoses, improving clinician trust

- Fair Lending Models: Financial algorithms are being redesigned to reduce bias and provide applicants with clear explanations

- Legal AI Audits: Tools are emerging to audit judicial AI systems for fairness and consistency

These breakthroughs suggest that explainability need not come at the expense of performance; it can be a multiplier of trust, safety, and justice.
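One simple check a fairness audit might run is a comparison of approval rates across groups, often called demographic parity. The sketch below is an illustration only: the group labels and decisions are made-up data, the function names are hypothetical, and a real audit would combine several metrics rather than rely on this one.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two hypothetical groups, A and B.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

print(approval_rates(decisions))          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions))  # 0.5
```

A gap of zero means every group is approved at the same rate. A large gap does not prove discrimination on its own, but it flags a system for closer review.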

6. What It Means: Transforming Industries and Institutions

Healthcare:

- Clinicians can validate AI recommendations, improving outcomes and reducing liability

Finance:

- Consumers gain insight into credit decisions, fostering transparency and equity

Law and Policy:

- Regulators can audit systems for compliance with ethical standards

This work is reshaping how institutions interact with intelligence, making it not just artificial but accountable.

7. The Road Ahead: Scaling and Embedding Ethics

Challenges:

- Complexity: Deep learning models are inherently hard to interpret

- Standardisation: No universal framework for ethical AI yet exists

- Cultural Sensitivity: Ethics vary across contexts, and systems must adapt accordingly

Opportunities:

- Modular ethics layers for AI systems

- Terrain-mapped governance protocols

- Ceremony-rich interfaces for human-AI dialogue and contestation

8. Final Note: A Vision for Dignified Intelligence

This research invites a future where intelligence is not just efficient, but empathetic. Where algorithms are not hidden arbiters, but visible collaborators. Where technology honours ambiguity, accountability, and care.
